Massachusetts, United States (Enmaeya News) — Artificial intelligence can double the likelihood of planting false memories, even when people know the material is AI-generated, according to researchers at the Massachusetts Institute of Technology and the University of California, Irvine.

The study builds on five decades of work by psychology professor Elizabeth Loftus, whose research shows how easily memories can be altered — sometimes convincing people of events that never happened, especially under questioning by prosecutors or police.

The team found that manipulation can occur even when people know the texts and images they are seeing were created by AI. In some cases, AI doubled the chances of planting false memories.

Memory Isn’t a Recording

In famous experiments beginning in the 1970s, Loftus showed that certain suggestions could create false memories. For example, people could be made to believe they got lost in a shopping mall as a child or got sick from eating certain foods. These false memories were strong enough to make people avoid those foods later.

Many people still think memory works like a video recorder, which makes them easier to trick, Loftus said. “Memory is a creative process,” she explained. The brain puts together memories from bits and pieces collected over time. Forgetting doesn’t just mean losing memories — it can also mean adding things that never happened.

Loftus also studied “loaded polls,” where questions include false information, like asking, “What would you think of Joe Biden if you knew he was convicted of tax evasion?” She warns that AI could use these tricks on a large scale.

Not Deepfakes—Memory Fakes

Pat Pataranutaporn of MIT’s Media Lab says altering memory is different from fooling people with deepfake videos. “You don’t need to create a fake version of The New York Times,” he said. “It’s enough to convince someone they once read something there. People generally don’t doubt their own memories.”

Pataranutaporn led three memory experiments. In the first, participants watched a video of an armed robbery and were divided into three groups. One group received no misleading questions, the second received misleading questions in writing, and the third was asked misleading questions by a chatbot.

One misleading question asked whether there was a security camera near where the robbers parked their car. One-third later remembered seeing the robbers arrive in a car, even though no car appeared in the video. The false memory persisted a week later.

The group questioned by the chatbot formed false memories 1.7 times more often than the group that received written misinformation.

When AI Rewrites the Story

In another experiment, researchers showed that AI-created summaries or conversations with false information can add fake details to a story.

People who saw these changed versions remembered fewer true facts and felt less sure about what they remembered.

A third study looked at how images and videos might cause false memories. Two hundred volunteers were shown 24 photos, some from the news and others personal.

Later, they saw one of four versions: the original photos, photos changed by AI, those AI-changed photos turned into short videos, or completely AI-made images turned into videos.

Even people who saw the original photos made some mistakes in what they remembered. But those who saw AI-changed images or videos were more likely to have false memories. False memories were most common among people who watched videos made from fully AI-created images.

Younger people were more likely than older people to remember things that didn’t happen. Education level didn’t make a difference.

All participants knew from the beginning that the pictures and videos were made by AI.

Between Truth and Fiction

Some changes to the images were small, like adding soldiers to a public gathering or changing the weather, while most of the original picture stayed the same. Experts say false information works best when about 60% of it is true.

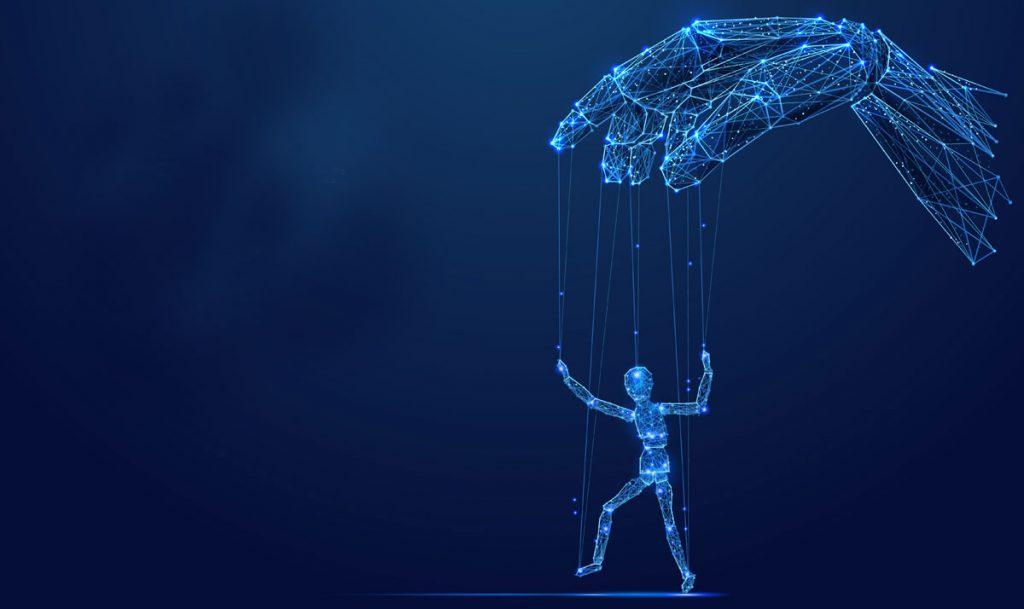

The researchers say their results show that AI can affect how we see reality in ways beyond just spreading fake news. Social media algorithms already promote extreme ideas and conspiracy theories by making them seem more popular than they really are.

AI chatbots might have even more hidden and unpredictable effects. Experts say we should be ready to change our opinions based on facts and strong evidence, but should also stay alert when someone tries to change what we believe by twisting what we see, feel, or remember.