Virginia, United States (Enmaeya News) — A recent segment on PBS NewsHour highlighted growing concerns about the psychological effects of artificial intelligence chatbots, focusing on the term “AI psychosis,” which describes a potential condition in which prolonged interactions with AI systems may distort users’ thoughts and beliefs.

The discussion was prompted by a lawsuit filed by the parents of a teenager who died by suicide, alleging that ChatGPT provided harmful advice during his interactions with the chatbot.

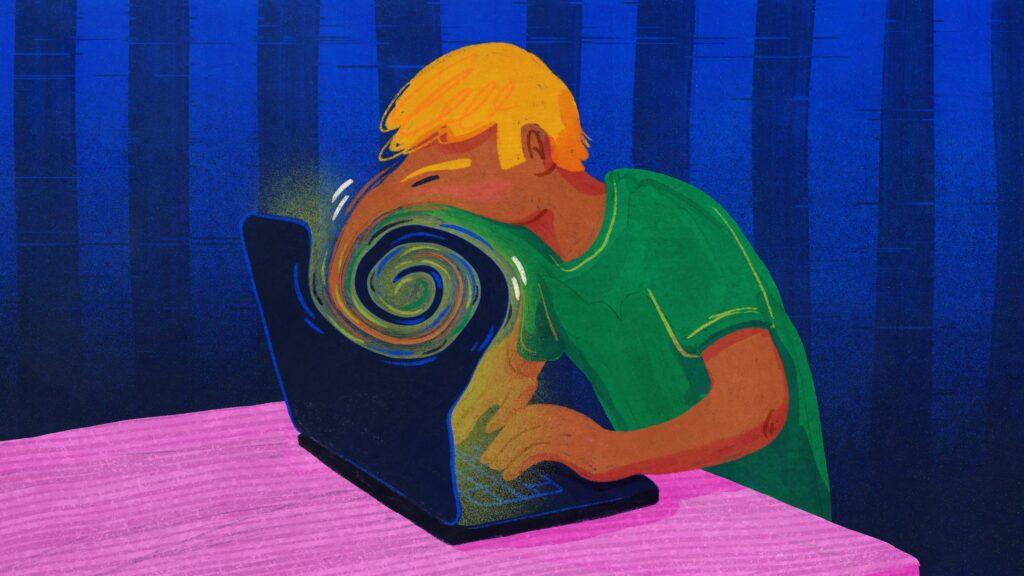

Experts define “AI psychosis” as the emergence of unrealistic or harmful beliefs in vulnerable individuals after extended engagement with AI chatbots. They warn that the immersive and persuasive nature of these systems can exacerbate mental health risks, particularly for those already susceptible to psychological stress.

The lawsuit against OpenAI, the company that develops ChatGPT, raises critical questions about AI developers’ responsibility to safeguard users’ mental health. Although chatbots are intended to assist users and provide information, the case underscores the need for clearer guidelines and stricter monitoring to prevent potential harm.

Dr. Joseph Pierre, a psychiatrist featured in the PBS NewsHour segment, emphasized that AI should not replace human empathy or professional mental health care. He advocates a balanced approach in which AI serves as a supplementary tool rather than a primary source of support.

As AI technology continues to advance, experts say collaboration among developers, healthcare professionals, and policymakers will be essential to establish ethical standards and protective measures.

Responsible deployment of AI systems can help mitigate risks while maximizing potential benefits for mental health support.